Ultrasound is perhaps best known as the technology behind non-invasive body scans, underwater communication, and the sensors that help us park our cars. A young startup called Sonair out of Norway wants to employ it for something else: 3D computer vision for autonomous hardware applications.

Sonair’s founder and CEO, Knut Sandven, believes the company’s application of ultrasound, which reads sound waves to detect people and objects in 3D with minimal energy and computational requirements, can be the basis of more useful and considerably less expensive solutions than today’s prevailing approach, LIDAR.

Sonair has now raised $6 million in funding from early-stage specialists Skyfall and RunwayFBU, and is rolling out early access to its tech. The initial target is developers of autonomous mobile robots, but the company’s vision (heh) is to see the tech used in other applications too.

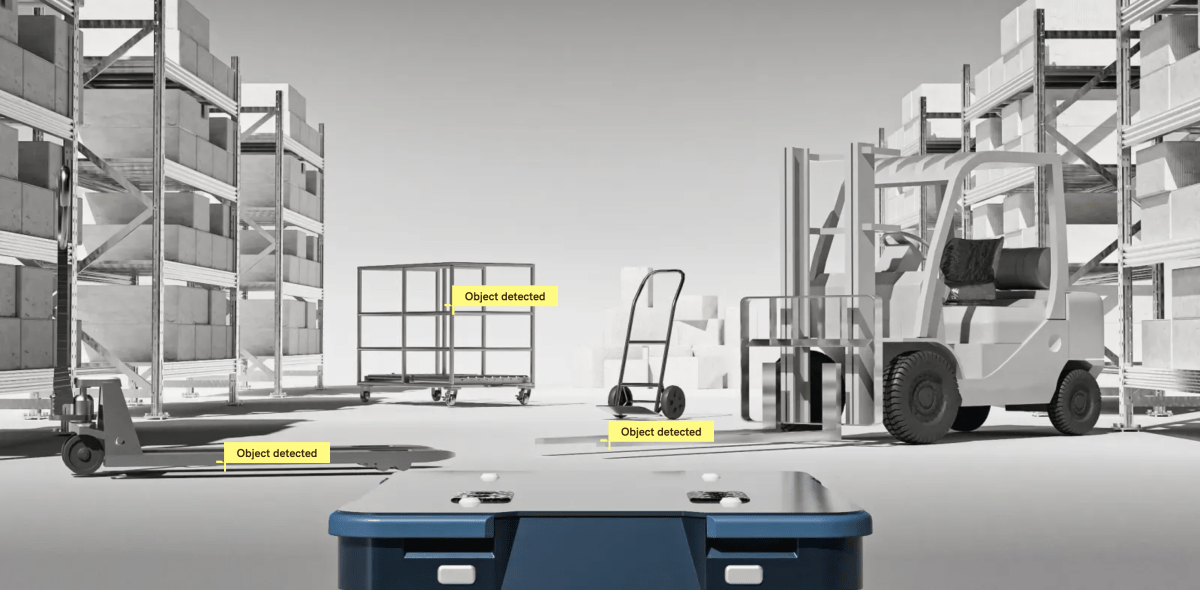

“Our go-to-market plan is to start with robots, specifically autonomous mobile robots [AMRs] — moving stuff from A to B,” Sandven said. “We are starting indoors — a limitation to give us focus, [but] we will, of course, expand into the other robotic categories and into automotive in the long term.”

The name Sonair is a play on sonar, which uses sound traveling through water to sense objects in 3D, applied instead to sensors that read signals in the air. That is effectively what the startup has produced.

Sandven is an engineer and entrepreneur whose previous startup, GasSecure, built gas sensors based on MEMS technology — microelectromechanical systems combining mechanical and electronic components to create miniature devices with moving parts. (The petrochemical industry is a major part of Norway’s national economy.)

After GasSecure was acquired by a German industrial safety specialist, Sandven started thinking about other uses of MEMS technology and looked into research from SINTEF, a group that works with Norway’s main science and technology university to commercialize research. Dozens of companies have spun out of the group’s work over the years.

SINTEF had created a new kind of MEMS-related ultrasonic sensor, he said, “which was ready for commercialization.” Sandven acquired the IP for the technology (specifically for acoustic ranging and detection), recruited the researchers who created it, and Sonair was born.

Although LIDAR has been a standard part of autonomous systems development in recent years, there is still space for complementary or alternative approaches. LIDAR is considered expensive, it has range limitations, and it can suffer interference from light sources and from certain surfaces and materials.

While companies like Mobileye are looking more seriously at alternatives such as radar, Sandven believes Sonair has a viable chance because, he says, its technology can cut the overall cost of sensor packages by 50% to 80%.

“Our mission is to replace LIDAR,” he said.

The ultrasound sensors that Sonair has built, and the software that “reads” their data, do not work in a vacuum. Like LIDAR, they work in concert with cameras to triangulate and give the autonomous system in question a more accurate picture.

Sonair’s ultrasound tech is based on “beamforming,” a method also used in radar. The company says the data it picks up is then processed and combined using AI (specifically, object recognition algorithms) to create spatial information from sound waves. The hardware using the technology, initially mobile robots, gets a 180-degree field of view with a range of five meters and can use fewer sensors while addressing some of LIDAR’s shortcomings.
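Sonair has not published how its beamforming works, so what follows is only a textbook illustration of the general idea: a minimal narrowband delay-and-sum beamformer in Python with NumPy. It assumes a hypothetical straight-line array of eight receivers spaced half a wavelength apart and a 40 kHz carrier (a typical frequency for ultrasonic parking sensors, not a Sonair specification). For each candidate direction, the code phase-aligns the receivers as if the echo came from that direction, sums them, and measures the resulting power; the direction with the most power is the estimated bearing of the reflector. Scanning from -90° to +90° mirrors the 180-degree field of view the company describes.

```python
import numpy as np

# All parameters are illustrative assumptions, not Sonair's specifications.
SPEED_OF_SOUND = 343.0            # m/s in air at about 20 °C
FREQ_HZ = 40_000.0                # assumed ultrasonic carrier frequency
WAVELENGTH = SPEED_OF_SOUND / FREQ_HZ
NUM_ELEMENTS = 8                  # hypothetical number of receivers
SPACING = WAVELENGTH / 2          # half-wavelength spacing avoids grating lobes


def steering_vector(angle_deg):
    """Expected phase at each receiver for a plane wave from angle_deg (0 = straight ahead)."""
    positions = np.arange(NUM_ELEMENTS) * SPACING
    delays = positions * np.sin(np.deg2rad(angle_deg)) / SPEED_OF_SOUND
    return np.exp(-2j * np.pi * FREQ_HZ * delays)


def beam_power(snapshot, angles_deg):
    """Narrowband delay-and-sum: phase-align the receivers for each look angle, sum, return power."""
    powers = np.empty(len(angles_deg))
    for i, angle in enumerate(angles_deg):
        weights = steering_vector(angle) / NUM_ELEMENTS
        # np.vdot conjugates its first argument, cancelling the expected phase shifts.
        powers[i] = np.abs(np.vdot(weights, snapshot)) ** 2
    return powers


# Usage: a noise-free echo arriving from +30 degrees.
angles = np.arange(-90, 91)       # 1-degree steps across a 180-degree field of view
echo = steering_vector(30.0)
estimate = angles[np.argmax(beam_power(echo, angles))]
print(estimate)                   # 30
```

A real sensor would also have to deal with noise, wideband pulses, multiple reflectors and range gating, and the AI object-recognition stage the company mentions would sit downstream of a step like this; none of that is modeled here.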

There are some interesting ideas still to be explored here. The company’s focus right now is on new techniques to improve how well autonomous systems can understand the objects in front of them. The tech is small, however, and could be applied in other form factors. Could it, for example, be used in handsets or wearables to complement or replace pressure-based touch sensing?

“What’s being done today by other companies is [focused on] touch sensors,” Sandven said. “After you touch something, the device will measure the pressure or how hard or soft you’re touching. Where we come in is the moment before you touch. So if you have a hand approaching something, you can respond already with our technology. You can move very fast towards the objects. But then you get precision distance measurements, so you can be very soft in the touch. It’s not what we’re doing right now, but it’s something we could do.”

Sagar Chandna from RunwayFBU projects that 2024 will see 200,000 autonomous mobile robots produced, working out to a market of $1.4 billion. That gives Sonair an immediate market opportunity as a cheaper alternative for computer vision components.
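Dividing the projected market size by the projected unit count gives the average value per robot that those two figures imply. This is back-of-the-envelope arithmetic on the quoted numbers, not a figure RunwayFBU has published, and it says nothing about how much of that value goes to sensors.

$$
\frac{\$1.4 \times 10^{9}}{2 \times 10^{5}\ \text{robots}} = \$7{,}000\ \text{per robot}
$$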

“With reduced costs in sensor technology and AI advancements in perception and decision-making, industries from manufacturing to healthcare are poised to benefit,” said Skyfall partner Preben Songe-Møller.